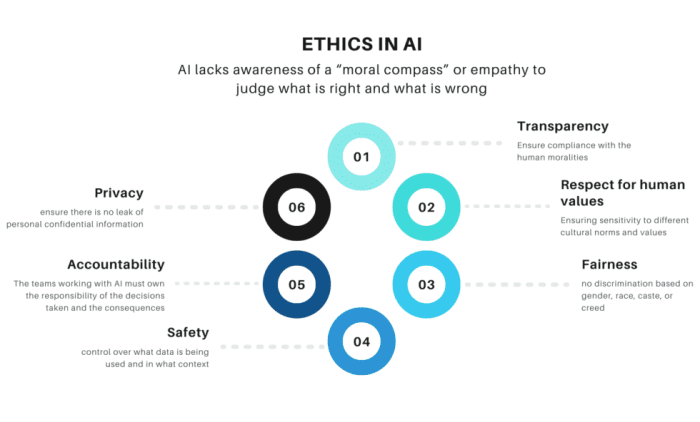

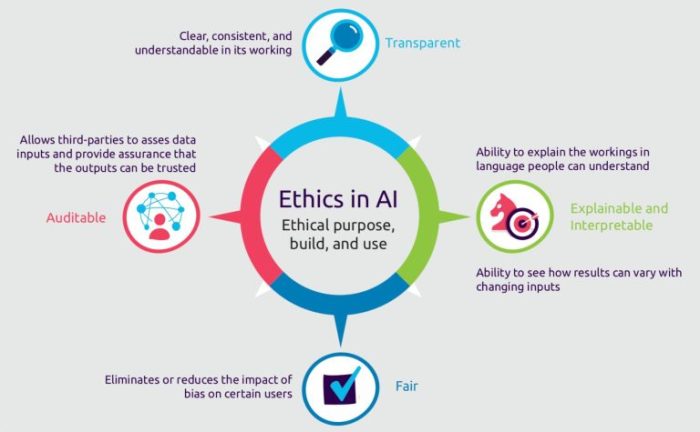

Ethics in artificial intelligence is a rapidly evolving field, crucial for shaping the responsible development and deployment of AI systems. From ensuring fairness and mitigating bias to addressing privacy concerns and societal impacts, this exploration delves into the complex ethical considerations surrounding AI.

This comprehensive overview examines key ethical frameworks, analyzes potential biases in algorithms and datasets, and explores the importance of transparency and accountability. The discussion also touches on the impact of AI on healthcare, autonomous systems, and international cooperation, providing insights into future challenges and potential solutions.

Defining Ethical Frameworks in AI

Ethical considerations are paramount in the development and deployment of artificial intelligence. AI systems are increasingly impacting various aspects of human life, from healthcare and finance to law enforcement and education. Therefore, establishing robust ethical frameworks is crucial to ensure that these systems are used responsibly and benefit society as a whole. These frameworks provide a structured approach to navigate the complex ethical dilemmas inherent in AI.

Different Ethical Frameworks

Various ethical frameworks offer distinct perspectives on how to approach AI development and deployment. These frameworks provide a structured approach to understanding and addressing the moral implications of AI systems.

- Utilitarianism focuses on maximizing overall happiness and well-being. This framework assesses the consequences of an action and selects the option that produces the greatest good for the greatest number of people. In the context of AI, a utilitarian approach might prioritize AI systems that improve public health or increase economic productivity, even if individual rights are slightly compromised in the process.

A key strength is its potential to address societal needs efficiently, while a weakness is the difficulty in accurately predicting and evaluating the long-term consequences of AI actions, particularly when dealing with unforeseen circumstances.

- Deontology emphasizes adherence to moral duties and rules. This framework suggests that certain actions are inherently right or wrong, regardless of their consequences. In the context of AI, a deontological approach might prioritize ensuring fairness and transparency in algorithms, even if it means sacrificing some efficiency or potential gains. Strengths lie in its ability to provide clear guidelines for ethical conduct, while weaknesses include potential inflexibility in addressing complex situations where ethical rules may conflict.

- Virtue Ethics focuses on the character and moral virtues of the individuals involved in AI development and deployment. This framework suggests that ethical actions stem from virtuous character traits, such as honesty, compassion, and fairness. In AI, this framework might promote the development of AI systems that align with human values and promote ethical decision-making. A strength of this framework is its emphasis on the human element, but a weakness is the challenge in defining and measuring virtues in a consistent manner across cultures and contexts.
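The toy sketch below encodes the first two frameworks as decision rules to make the contrast between them concrete. Everything in it is hypothetical:

- the `Option` type;
- the utility numbers;
- the choice to treat duties as hard filters, then pick the best permitted option.

It illustrates the reasoning and is not a way to automate ethics. Virtue ethics, which centers on character rather than on a decision procedure, does not reduce to a function like this.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Option:
    """A candidate action in a hypothetical decision problem."""
    name: str
    utilities: tuple      # change in well-being for each affected person
    violates_duty: bool   # True if the action breaks a moral rule


def utilitarian_choice(options):
    # Consequences only: pick the option with the largest total well-being.
    return max(options, key=lambda o: sum(o.utilities))


def deontological_choice(options):
    # Duties first: rule-breaking options are excluded, whatever their payoff.
    permitted = [o for o in options if not o.violates_duty]
    if not permitted:
        return None  # no permissible action exists
    # Among permitted actions, use total well-being to choose between them.
    return max(permitted, key=lambda o: sum(o.utilities))


options = [
    Option("use_data_without_consent", (5, 5, 5, -2), violates_duty=True),
    Option("ask_for_consent_first", (3, 3, 3, 1), violates_duty=False),
]
print(utilitarian_choice(options).name)    # use_data_without_consent (total 13 vs 10)
print(deontological_choice(options).name)  # ask_for_consent_first
```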

Comparison of Frameworks

Different ethical frameworks provide distinct approaches to addressing bias, fairness, and accountability in AI. These frameworks offer diverse lenses through which to examine the ethical implications of AI systems.

| Framework | Bias | Fairness | Accountability |
|---|---|---|---|
| Utilitarianism | Potentially perpetuates existing biases if they lead to greater overall good. | Aims for fairness in aggregate outcomes, but individual cases might be overlooked. | Accountability rests on evaluating the overall impact of AI systems. |
| Deontology | Prioritizes avoiding biased outcomes through explicit rules and guidelines. | Stresses fair treatment based on moral principles, irrespective of outcome. | Emphasizes clear lines of responsibility and adherence to rules. |
| Virtue Ethics | Promotes development of AI systems by virtuous individuals, reducing the likelihood of biased outcomes. | Fairness is inherent in virtuous actions. | Accountability stems from individual moral character and responsibility. |

Key Principles of Ethical Frameworks

The following table summarizes the key principles of each framework. Understanding these principles helps in applying them effectively to AI development.

| Framework | Key Principles |
|---|---|
| Utilitarianism | Maximize overall happiness and well-being. Consider the consequences of actions. |
| Deontology | Adhere to moral duties and rules. Act according to moral principles. |
| Virtue Ethics | Develop virtuous character traits. Act in accordance with virtues. |

Bias and Fairness in AI

AI systems, while powerful, can inherit and amplify biases present in the data they are trained on. These inherited biases can lead to unfair or discriminatory outcomes in applications ranging from loan approvals to criminal justice assessments. Addressing them is crucial to ensure ethical and equitable use of AI.

Potential Sources of Bias in AI Algorithms and Datasets

AI algorithms are trained on datasets. If these datasets reflect existing societal biases, the AI system will likely perpetuate them. These biases can stem from various sources. For instance, historical data may reflect discriminatory practices, leading to skewed outcomes. Inadequate representation of certain demographics in the training data can result in poor performance or even outright discrimination against those groups.

Furthermore, the algorithms themselves can be designed in ways that introduce bias, even if the data is seemingly unbiased.
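A first check for the representation problem described above is to compare each group's share of the training data with its share of the population the system will serve. The sketch below makes two assumptions: group labels are available, and a reference distribution (for example, census figures) is known. The function name and the 80/20 example data are hypothetical.

```python
from collections import Counter


def representation_report(group_labels, reference_shares):
    """Compare each group's share of a dataset with a reference share.

    Returns {group: (dataset_share, reference_share, ratio)}; a ratio well
    below 1.0 means the group is underrepresented relative to the reference.
    """
    counts = Counter(group_labels)
    total = len(group_labels)
    report = {}
    for group, ref_share in reference_shares.items():
        share = counts.get(group, 0) / total
        report[group] = (share, ref_share, share / ref_share)
    return report


# Hypothetical training set: 80 records from group "A", 20 from group "B",
# compared with a population assumed to be split 50/50.
labels = ["A"] * 80 + ["B"] * 20
report = representation_report(labels, {"A": 0.5, "B": 0.5})
for group, (share, ref, ratio) in report.items():
    print(f"{group}: {share:.0%} of data vs {ref:.0%} expected (ratio {ratio:.2f})")
```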

Methods to Mitigate Bias in AI Systems

Various techniques can be employed to mitigate bias during both the training and deployment phases of AI systems. These methods aim to identify and rectify problematic patterns within the data and algorithms. Techniques include data augmentation to increase the representation of underrepresented groups, algorithmic adjustments to reduce bias in model outputs, and careful monitoring of system performance to detect and rectify emerging biases.

Moreover, the use of fairness-aware metrics during training and testing is critical to evaluating the extent to which an AI system exhibits fairness.
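Two widely used fairness-aware metrics are:

- demographic parity difference, the gap in positive-prediction rates between groups;
- equal opportunity difference, the gap in true-positive rates between groups.

The sketch below computes both in plain Python on made-up loan data. It assumes binary labels and that every group contains at least one truly positive example. The two metrics can disagree, and which one matters depends on the application. Libraries such as Fairlearn provide maintained implementations.

```python
def _rate(values):
    return sum(values) / len(values)


def demographic_parity_difference(y_pred, groups):
    """Largest gap in positive-prediction rate between any two groups (0 = parity)."""
    rates = [_rate([p for p, g in zip(y_pred, groups) if g == grp]) for grp in set(groups)]
    return max(rates) - min(rates)


def equal_opportunity_difference(y_true, y_pred, groups):
    """Largest gap in true-positive rate between groups: among people who truly
    qualify (y_true == 1), how often each group is predicted positive.
    Assumes each group has at least one positive example."""
    tprs = []
    for grp in set(groups):
        preds_for_positives = [p for t, p, g in zip(y_true, y_pred, groups) if g == grp and t == 1]
        tprs.append(_rate(preds_for_positives))
    return max(tprs) - min(tprs)


# Hypothetical loan decisions: 1 = approved / actually creditworthy.
y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["A"] * 4 + ["B"] * 4
print(demographic_parity_difference(y_pred, groups))         # 0.5 (0.75 vs 0.25)
print(equal_opportunity_difference(y_true, y_pred, groups))  # 0.5 (1.0 vs 0.5)
```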

Fairness in AI: Challenges and Considerations

Defining fairness in AI is a complex challenge. Different stakeholders may have conflicting notions of fairness. What one group considers fair, another might deem unfair. Moreover, there’s no universally accepted measure of fairness. The concept of fairness needs to be contextualized to specific applications and evaluated based on the potential impact of AI systems on various groups.

Real-World Examples of AI Bias

Several instances of AI systems exhibiting bias have been documented in the real world. For example, facial recognition systems have shown higher error rates for people with darker skin tones, leading to misidentification and potential discrimination. Automated lending systems have shown bias against certain demographic groups, resulting in unequal access to financial resources. Furthermore, AI systems used in criminal justice have displayed bias in predicting recidivism, potentially affecting sentencing decisions.

Bias Detection Methods

| Method | Description | Effectiveness |
|---|---|---|
| Data Analysis | Examining the data for patterns of underrepresentation or overrepresentation of certain groups. This might involve analyzing demographic distributions and identifying potential imbalances. | High, but can be subjective without further analysis. |
| Algorithmic Auditing | Analyzing the algorithms themselves for potential biases, such as through the use of fairness metrics and through visual inspection of feature importance. | Medium to high, dependent on the quality of the metrics used. |
| A/B Testing | Testing different versions of an AI system to evaluate the impact on various groups. | High, as it measures real-world impact, but time-consuming. |
| Human Review | Having human experts evaluate the AI system’s output and identify potential biases. | High, as human intuition and contextual understanding are key. |

Transparency and Explainability in AI

Transparency and explainability are crucial aspects of ethical AI development. These principles ensure that AI systems’ decision-making processes are understandable and trustworthy to users. Without transparency, it’s challenging to identify and address potential biases or errors in the system, making it difficult to build public trust and ensure accountability. This understanding is vital in various applications, from loan approvals to medical diagnoses.

Importance of Transparency and Explainability

AI systems are increasingly used in critical areas of life, influencing everything from loan applications to medical diagnoses. The opacity of many AI systems raises concerns about fairness, accountability, and trust. A lack of transparency can lead to unfair or inaccurate outcomes, undermining public confidence in these technologies. Consequently, understanding how AI systems arrive at their decisions is essential to building trust and ensuring responsible use.

Methods for Enhancing Explainability

Several techniques aim to make AI decision-making more understandable. These methods focus on providing insights into the reasoning behind AI outputs, allowing humans to comprehend the factors influencing the system’s decisions. Techniques include:

- Feature Importance Analysis: This method identifies the input features that most significantly contribute to the AI’s prediction. For example, in a loan application system, it might highlight credit score and income as the most influential factors in determining loan approval.

- Rule-Based Systems: These systems explicitly define the rules governing AI decision-making. This approach facilitates transparency by providing a clear understanding of the conditions leading to specific outputs. A system for detecting fraudulent transactions, for instance, could explicitly list the rules for flagging unusual activity.

- Interpretable Models: Certain algorithms, like decision trees and linear regression, are inherently more interpretable than complex deep learning models. Understanding the structure of these models can reveal how different input values influence the output. For example, a decision tree for customer churn prediction would visually show the steps taken to reach a conclusion.
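The rule-based approach can be sketched in a few lines. Each rule carries a human-readable description, and the system reports every rule that fired alongside its decision. The rules, thresholds, and "flag on two or more hits" policy below are hypothetical examples, not recommendations for a real fraud system.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Rule:
    description: str
    check: Callable[[dict], bool]


# Hypothetical rules and thresholds, for illustration only.
RULES = [
    Rule("Amount exceeds 10,000", lambda tx: tx["amount"] > 10_000),
    Rule("Country differs from the cardholder's home country",
         lambda tx: tx["country"] != tx["home_country"]),
    Rule("Made between 00:00 and 04:59", lambda tx: 0 <= tx["hour"] < 5),
]


def flag_transaction(tx, rules=RULES, min_hits=2):
    """Return (flagged, reasons), where reasons lists every rule that fired,
    so each decision comes with a human-readable explanation."""
    reasons = [rule.description for rule in rules if rule.check(tx)]
    return len(reasons) >= min_hits, reasons


tx = {"amount": 12_500, "country": "FR", "home_country": "US", "hour": 14}
flagged, reasons = flag_transaction(tx)
print(flagged)  # True
for reason in reasons:
    print(" -", reason)
```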

Challenges in Achieving Transparency

Complex AI models, especially deep learning models, can be notoriously difficult to interpret. Their intricate structure and vast number of parameters often make it challenging to trace the path from input to output. This opacity can hinder the ability to identify biases or errors within the system. Furthermore, the need for computational resources and expertise in developing and applying these techniques can create obstacles.

Examples of Transparent AI Systems

Several organizations are actively working to develop more transparent AI systems. For example, some lenders now use explainable AI techniques to show which factors influenced a loan decision. Such explanations help applicants and reviewers understand the rationale behind a decision, which can foster trust and accountability. Likewise, healthcare systems are exploring transparent AI models for diagnostic purposes, allowing doctors to understand how the system arrived at its conclusion.

Techniques for Explainable AI (XAI)

| Technique | Description | Example |
|---|---|---|
| Local Interpretable Model-agnostic Explanations (LIME) | Explains the predictions of any black box model by approximating it locally with a simpler, more interpretable model. | Predicting customer churn. LIME can show which customer features (e.g., tenure, spending) most influenced the prediction. |
| SHapley Additive exPlanations (SHAP) | Provides a unified framework for explaining any model’s prediction by assigning a contribution to each feature. | Assessing risk in insurance. SHAP can show the influence of factors like age, driving record, and claims history on the risk assessment. |
| Partial Dependence Plots (PDP) | Visualize the impact of individual features on the model’s output. | Identifying factors affecting product sales. PDPs can show how sales change with varying product prices, marketing campaigns, or demographics. |
| Feature Importance Analysis | Quantifies the importance of each input feature in determining the model’s output. | Loan applications. Feature importance analysis can highlight the relative importance of factors like credit score and income in the loan approval process. |
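SHAP builds on Shapley values from cooperative game theory. For a model with only a few features, Shapley values can be computed exactly. Each feature's value is its average marginal contribution across all orders in which features could be added, which is what SHAP estimates.

The sketch below assumes that a feature "absent" from a coalition takes a fixed baseline value. The `toy_score` model and its inputs are hypothetical. The `shap` library's explainers approximate this computation far more efficiently than the exhaustive loop used here.

```python
from itertools import combinations
from math import factorial


def shapley_values(model, x, baseline):
    """Exact Shapley values for a single prediction model(x).

    A feature absent from a coalition is set to its baseline value (one common
    convention). Cost grows as 2**n, so this only suits a handful of features.
    """
    n = len(x)

    def value(coalition):
        z = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return model(z)

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            for subset in combinations(others, size):
                s = set(subset)
                # Weight = fraction of feature orderings in which exactly s precedes i.
                weight = factorial(size) * factorial(n - size - 1) / factorial(n)
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi


def toy_score(z):
    # Hypothetical risk model over [normalized credit score, normalized income].
    credit, income = z
    return 0.6 * credit + 0.4 * income + 0.2 * credit * income


x = [0.9, 0.3]
baseline = [0.5, 0.5]
phi = shapley_values(toy_score, x, baseline)
print(phi)
# Efficiency property: contributions sum to model(x) - model(baseline).
print(sum(phi), toy_score(x) - toy_score(baseline))
```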

Accountability and Responsibility

Ensuring ethical AI development and deployment necessitates a shared responsibility among developers, users, and organizations. A clear understanding of roles and accountability mechanisms is crucial to prevent misuse and promote trust in AI systems. This involves establishing frameworks that hold stakeholders responsible for unethical practices, while simultaneously fostering a culture of ethical AI development.

Roles of Stakeholders in Ethical AI

Defining the specific responsibilities of developers, users, and organizations is fundamental to ethical AI. Developers bear the primary responsibility for designing and implementing AI systems with ethical considerations in mind. Users also play an important role, because how they interact with these systems shapes the systems' real-world impact. Organizations, as the entities that own and deploy these systems, need to establish clear guidelines and oversight mechanisms to ensure ethical operation.

- Developers are accountable for designing AI systems that align with ethical principles. This includes thorough risk assessment, proactive identification of potential biases, and implementing robust mechanisms for monitoring system performance. They must also ensure transparency in their methodologies and algorithms.

- Users are obligated to use AI systems responsibly and ethically. This includes understanding the limitations and potential biases of the system and adhering to any established guidelines. User education and awareness campaigns are essential to fostering responsible usage.

- Organizations are responsible for establishing clear ethical guidelines and policies for AI development and deployment within their operations. They must provide appropriate training and resources for their teams and enforce adherence to these guidelines. This also involves monitoring the performance of AI systems and rectifying any identified issues promptly.

Mechanisms for Holding Stakeholders Accountable

Accountability mechanisms are necessary to deter unethical AI practices. These mechanisms should include clear reporting channels, internal review boards, and external audit processes. Sanctions for violations should be proportionate to the harm caused. Implementing robust oversight and enforcement procedures is key to ensuring ethical practices.

- Internal Review Boards can play a crucial role in assessing the ethical implications of AI projects and ensuring compliance with established guidelines. These boards can be composed of experts from various fields, including ethics, law, and technology.

- External Audits can provide independent verification of AI systems’ adherence to ethical principles. These audits can help identify potential biases or vulnerabilities that internal teams may have missed.

- Clear Reporting Channels facilitate the reporting of unethical AI practices. These channels should be accessible, confidential, and provide a clear path for escalation of concerns.
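Internal reviews, external audits, and reporting channels all depend on a trustworthy record of what an AI system actually decided. One possible building block, not prescribed by the text above, is a tamper-evident decision log in which each entry includes the hash of the previous one. The sketch below is a minimal illustration with hypothetical field names.

It has two limitations:

- Anyone with write access could recompute the whole chain, so the latest hash would also need to be stored somewhere independent, such as with an auditor.
- Removing the final entry is only detectable by comparing against that stored hash.

```python
import hashlib
import json
import time


def _digest(body):
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


class DecisionLog:
    """Append-only record of automated decisions. Each entry stores the hash of
    the previous entry, so altering or removing an earlier entry breaks the chain."""

    GENESIS = "0" * 64

    def __init__(self):
        self.entries = []

    def record(self, model_version, inputs, output, timestamp=None):
        body = {
            "model_version": model_version,
            "inputs": inputs,
            "output": output,
            "timestamp": time.time() if timestamp is None else timestamp,
            "prev_hash": self.entries[-1]["hash"] if self.entries else self.GENESIS,
        }
        self.entries.append({**body, "hash": _digest(body)})

    def verify(self):
        """Recompute every hash; False means the log was modified after the fact."""
        prev_hash = self.GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev_hash"] != prev_hash or _digest(body) != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True


log = DecisionLog()
log.record("credit-model-v1", {"income": 52000, "credit_score": 710}, "approved")
log.record("credit-model-v1", {"income": 18000, "credit_score": 590}, "denied")
print(log.verify())                    # True
log.entries[1]["output"] = "approved"  # simulated tampering
print(log.verify())                    # False
```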

Legal and Regulatory Frameworks

Legal and regulatory frameworks are emerging to address the ethical challenges posed by AI. These frameworks aim to establish standards for AI development, deployment, and use. The evolution of these frameworks is ongoing and is expected to become more comprehensive and specific in the coming years.

- Existing Laws and Regulations can be adapted to apply to AI systems, but often require careful interpretation and adaptation to address the unique characteristics of AI. Examples include existing laws regarding data privacy and discrimination.

- New Regulations are emerging, with some countries and regions developing specific legislation for AI. These regulations often address issues like algorithmic bias, transparency, and accountability. They may also establish specific roles and responsibilities for different stakeholders involved in AI development and deployment.

Examples of Successful Strategies

Successful strategies for building ethical AI practices often combine proactive measures with responsive approaches. Organizations are increasingly building ethical considerations into their AI development lifecycles, for example by conducting ethical reviews at several stages of development.

- Proactive Ethical Reviews can help identify potential ethical issues early in the development process. This can be done through ethical impact assessments, stakeholder consultations, and the development of comprehensive ethical guidelines.

- Continuous Monitoring and Evaluation can help ensure that AI systems are operating ethically and in line with established principles. This includes mechanisms for detecting and mitigating bias, as well as ensuring transparency and explainability.

Stakeholder Responsibilities Table

| Stakeholder | Responsibility |
|---|---|
| Developers | Design ethical AI systems, assess risks, ensure transparency, and monitor performance. |
| Users | Use AI systems responsibly, understand limitations, and adhere to guidelines. |
| Organizations | Establish ethical guidelines, provide training, enforce compliance, monitor systems, and address issues. |

Privacy and Data Security in AI

AI systems rely heavily on vast amounts of data, raising significant concerns about individual privacy. The collection, storage, and usage of this data must be approached with careful consideration for ethical implications. This section explores the impact of AI on privacy, outlining ethical considerations and methods to protect user data.

Impact of AI on Individual Privacy

AI systems, especially those utilizing machine learning, often require large datasets for training and operation. This data collection can encompass a wide range of personal information, potentially exposing individuals to privacy risks. The potential for misuse of, or unauthorized access to, this data is a significant concern. For example, facial recognition technology, while offering benefits, raises questions about surveillance and potential discrimination.

Ethical Considerations in Data Collection, Storage, and Usage

The ethical use of data in AI systems requires careful consideration of several factors. Data collection practices must be transparent and comply with relevant regulations. Data storage methods should prioritize security and confidentiality, minimizing the risk of breaches. Data usage must be aligned with the initial consent provided by individuals, and any changes in data usage should be clearly communicated.

Furthermore, the use of data must adhere to legal and ethical standards, including avoiding biases that could perpetuate societal inequalities.
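Aligning data use with consent can be enforced in code as well as in policy. The sketch below releases a record for a given purpose only if the person consented to that purpose. It also strips the record down to the fields the purpose needs, which applies the data-minimization principle. The record type, purposes, and field lists are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class PersonRecord:
    user_id: str
    data: dict
    consented_purposes: set = field(default_factory=set)


# Hypothetical mapping from each processing purpose to the fields it actually needs.
REQUIRED_FIELDS = {
    "model_training": {"age_band", "diagnosis_code"},
    "appointment_reminders": {"email"},
}


def records_for_purpose(records, purpose):
    """Return only records whose owner consented to `purpose`, each reduced
    to the fields that purpose requires (data minimization)."""
    needed = REQUIRED_FIELDS[purpose]  # raises KeyError for undefined purposes
    return [
        {k: v for k, v in r.data.items() if k in needed}
        for r in records
        if purpose in r.consented_purposes
    ]


records = [
    PersonRecord("u1", {"email": "u1@example.com", "age_band": "30-39", "diagnosis_code": "J45"},
                 {"model_training"}),
    PersonRecord("u2", {"email": "u2@example.com", "age_band": "50-59", "diagnosis_code": "E11"},
                 {"appointment_reminders"}),
]
print(records_for_purpose(records, "model_training"))
```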

Methods to Safeguard User Data Privacy in AI Applications

Several methods can be employed to safeguard user data privacy in AI applications. De-identification techniques, such as pseudonymization and generalization, can help protect individual identities. Differential privacy adds carefully calibrated random noise to data or to query results, making it harder to infer information about any specific individual. Federated learning allows models to be trained on decentralized data, reducing the need for centralized data storage.

Data minimization principles can limit the collection and retention of data to only what is necessary for the AI system’s function.
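The noise-adding idea behind differential privacy is easiest to see in the Laplace mechanism applied to a counting query. The sketch assumes one record per person, so adding or removing one person changes the count by at most 1. The function names and data are hypothetical.

This is a textbook illustration only. Naive floating-point implementations have known weaknesses, and each released answer consumes part of a privacy budget. Production systems should rely on a vetted differential privacy library.

```python
import random


def laplace_noise(scale, rng):
    # The difference of two independent exponential draws with mean `scale`
    # is Laplace(0, scale) distributed.
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)


def private_count(values, predicate, epsilon, rng=None):
    """Release a count under epsilon-differential privacy (Laplace mechanism).

    A count has sensitivity 1, so Laplace noise with scale 1/epsilon suffices.
    Smaller epsilon means more noise and stronger privacy.
    """
    rng = rng or random.Random()
    true_count = sum(1 for v in values if predicate(v))
    return true_count + laplace_noise(1 / epsilon, rng)


ages = [23, 67, 45, 71, 80, 34]  # hypothetical records; true count of ages > 65 is 3
print(private_count(ages, lambda a: a > 65, epsilon=1.0))  # 3 plus random noise
```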

Examples of Successful Privacy-Preserving AI Techniques

Several examples demonstrate the successful application of privacy-preserving AI techniques. Federated learning has been used to train machine learning models on medical data without sharing sensitive patient information. Homomorphic encryption allows computations to be performed on encrypted data, protecting the confidentiality of the underlying data. Differential privacy has been applied to various applications, such as location tracking, enhancing privacy without significantly affecting the accuracy of results.
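Federated averaging, the core loop of federated learning, can be simulated in a few lines. Each client improves the shared model on its own data, and the server only ever sees and averages the returned parameters. The sketch fits a one-variable linear model and runs all "clients" in a single process for illustration. The hospital datasets, learning rate, and round counts are hypothetical. Shared parameters can still leak information, which is why federated learning is often combined with secure aggregation or differential privacy.

```python
def local_update(w, b, data, lr=0.1, epochs=20):
    """Gradient descent for y ~ w*x + b on one client's data (which stays local)."""
    n = len(data)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w, b = w - lr * grad_w, b - lr * grad_b
    return w, b


def federated_averaging(clients, rounds=50):
    """Server loop: send the global model out, then average the returned
    parameters weighted by each client's number of examples."""
    w, b = 0.0, 0.0
    total = sum(len(data) for data in clients)
    for _ in range(rounds):
        updates = [local_update(w, b, data) for data in clients]
        w = sum(len(data) * uw for data, (uw, _) in zip(clients, updates)) / total
        b = sum(len(data) * ub for data, (_, ub) in zip(clients, updates)) / total
    return w, b


# Two hypothetical hospitals whose data both follow y = 2x + 1.
hospital_a = [(0.0, 1.0), (0.5, 2.0), (1.0, 3.0)]
hospital_b = [(1.5, 4.0), (2.0, 5.0)]
w, b = federated_averaging([hospital_a, hospital_b])
print(round(w, 3), round(b, 3))
```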

Comparison of Privacy-Enhancing Technologies

| Privacy-Enhancing Technology | Description | Strengths | Weaknesses |
|---|---|---|---|
| Differential Privacy | Adds calibrated random noise to data or query results, making it harder to infer information about specific individuals. | Effective in protecting individual privacy, allows for statistical analysis. | Can reduce the accuracy of the analysis, may not be suitable for all applications. |
| Federated Learning | Trains machine learning models on decentralized data, without centralizing it. | Preserves user privacy, improves data security. | May be less efficient in some cases, data heterogeneity can be a challenge. |
| Homomorphic Encryption | Allows computations to be performed on encrypted data without decrypting it. | Maintains data confidentiality, suitable for sensitive data. | Can be computationally expensive, limited in the types of computations supported. |
| Data Anonymization | Removes or modifies identifying information from data. | Relatively simple to implement, cost-effective. | May not be suitable for complex analysis, potential for re-identification if not done carefully. |
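The anonymization row above can be illustrated with three common steps:

- replacing direct identifiers with keyed pseudonyms;
- generalizing quasi-identifiers such as age and ZIP code;
- checking k-anonymity, meaning every combination of quasi-identifier values is shared by at least k records.

The key, the records, and the 10-year age bands below are hypothetical. Pseudonymized data can still be linked back to people. Even a k-anonymous release can leak a sensitive attribute when everyone in a group shares the same value, so these steps reduce rather than eliminate risk.

```python
import hashlib
import hmac
from collections import Counter

SECRET_KEY = b"replace-with-a-key-from-a-secrets-manager"  # hypothetical placeholder


def pseudonymize(identifier: str) -> str:
    # Keyed hash (HMAC-SHA256): stable, so records can still be linked, but not
    # reversible without the key. Plain unkeyed hashes of emails are easy to brute-force.
    return hmac.new(SECRET_KEY, identifier.encode(), hashlib.sha256).hexdigest()[:16]


def generalize_age(age: int, width: int = 10) -> str:
    low = (age // width) * width
    return f"{low}-{low + width - 1}"


def k_anonymity(rows, quasi_identifiers):
    """Size of the smallest group of rows sharing the same quasi-identifier values."""
    groups = Counter(tuple(r[q] for q in quasi_identifiers) for r in rows)
    return min(groups.values())


raw = [
    {"email": "a@example.com", "age": 34, "zip": "02139", "diagnosis": "asthma"},
    {"email": "b@example.com", "age": 37, "zip": "02139", "diagnosis": "diabetes"},
    {"email": "c@example.com", "age": 52, "zip": "02141", "diagnosis": "asthma"},
    {"email": "d@example.com", "age": 58, "zip": "02141", "diagnosis": "flu"},
]
released = [
    {"pid": pseudonymize(r["email"]), "age": generalize_age(r["age"]),
     "zip": r["zip"][:3] + "**", "diagnosis": r["diagnosis"]}
    for r in raw
]
print(k_anonymity(raw, ["age", "zip"]))       # prints 1
print(k_anonymity(released, ["age", "zip"]))  # prints 2
```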

Societal Impacts of AI

Artificial intelligence (AI) is rapidly transforming various aspects of our lives, presenting both remarkable opportunities and significant societal challenges. Understanding the potential impacts of AI on different communities and sectors is crucial for navigating the complexities of this evolving landscape responsibly. The ethical implications of AI extend beyond individual interactions; they profoundly affect societal structures, economic stability, and the very fabric of human relationships.

Job Displacement and Economic Inequality

The automation potential of AI raises concerns about job displacement across various industries. While AI can create new roles, the potential for significant job losses in existing sectors necessitates proactive strategies for workforce retraining and adaptation. This transition can exacerbate existing economic inequalities, potentially widening the gap between the skilled and unskilled labor forces. Addressing this requires policies that support upskilling initiatives and create opportunities for individuals to acquire the necessary skills for the evolving job market.

Governments and businesses share responsibility for managing this transition. The rise of automated customer service systems, for instance, has already affected call center employment. At the same time, AI has helped create new roles in software development and data analysis.

Ethical Implications for Marginalized Communities

AI systems trained on biased data can perpetuate and amplify existing societal biases, disproportionately impacting marginalized communities. This can manifest in discriminatory outcomes in areas like loan applications, criminal justice, and even healthcare. Recognizing and mitigating these biases is essential to ensuring equitable access to AI-powered services and avoiding further marginalization. For example, facial recognition systems have been shown to have lower accuracy rates for people of color, raising concerns about their use in law enforcement.

Furthermore, AI systems can perpetuate stereotypes and reinforce existing societal prejudices if not carefully designed and monitored.

Methods to Address Societal Challenges

Several strategies can help mitigate the negative societal impacts of AI. Proactive workforce development programs, encompassing retraining and reskilling initiatives, are critical for adapting to the evolving job market. Transparent and accountable AI systems are essential to build public trust and ensure fair outcomes. Furthermore, ethical guidelines and regulations are needed to govern the development and deployment of AI systems.

These regulations should ensure that AI systems are used responsibly and avoid exacerbating existing inequalities.

Examples of AI Systems Benefiting Society

AI systems can contribute positively to societal well-being in numerous ways. For instance, AI-powered diagnostic tools in healthcare can improve accuracy and speed in disease detection. Furthermore, AI is instrumental in optimizing supply chains and logistics, potentially leading to reduced waste and increased efficiency. AI is also increasingly utilized in environmental monitoring, providing valuable data for conservation efforts.

These examples highlight the potential for AI to address pressing global challenges and enhance human well-being.

Table: Potential Societal Impacts of AI Applications

| AI Application Type | Potential Positive Impacts | Potential Negative Impacts |
|---|---|---|
| Healthcare Diagnostics | Improved accuracy and speed in disease detection, personalized medicine | Potential for bias in algorithms, lack of access for certain populations |
| Autonomous Vehicles | Increased safety, reduced traffic congestion, accessibility for disabled individuals | Job displacement for truck drivers, ethical dilemmas in accident scenarios, potential for misuse |
| Financial Services | Fraud detection, personalized financial advice, increased efficiency | Exacerbation of existing financial inequalities, potential for discriminatory lending practices |
| Environmental Monitoring | Improved environmental data collection, faster response to climate change | Potential for data misuse or misinterpretation, dependence on technology |

AI in Healthcare

Artificial intelligence (AI) is rapidly transforming healthcare, offering potential benefits in diagnosis, treatment, and research. However, its implementation necessitates careful consideration of ethical implications, particularly regarding patient privacy, data security, and potential biases. This section explores the ethical considerations surrounding AI in healthcare, including examples of applications, methods for ensuring ethical use, and the crucial role of patient consent and data privacy.

AI’s potential to enhance healthcare is substantial. From early disease detection to personalized treatment plans, AI can contribute significantly to improved patient outcomes. However, the integration of AI necessitates a robust ethical framework to mitigate potential risks and ensure equitable access to these advancements.

Ethical Considerations in Medical Diagnosis

AI algorithms, trained on vast datasets of medical images and patient records, can aid in diagnosing diseases with remarkable accuracy. However, the inherent biases within these datasets can lead to discriminatory outcomes. For instance, if a dataset predominantly features images from a specific demographic group, the AI may perform less effectively on images from other groups. Ensuring diverse and representative datasets is critical to mitigate this risk and ensure fairness in diagnosis.

Ethical Considerations in Treatment

AI can personalize treatment plans by analyzing individual patient data, leading to more effective and efficient care. However, concerns arise regarding transparency and explainability. If an AI recommends a particular treatment, understanding the rationale behind the recommendation is essential for patient trust and informed decision-making. Explainable AI (XAI) techniques are crucial for addressing this issue.

Ethical Considerations in Research

AI accelerates research by enabling faster analysis of large datasets. This can lead to breakthroughs in drug discovery and treatment development. However, ensuring data privacy and informed consent from research participants is critical. Protecting sensitive patient data from unauthorized access and misuse is paramount.

Examples of AI Applications in Healthcare with Ethical Concerns

- AI-powered diagnostic tools: An AI system trained on a dataset biased towards a particular ethnicity may misdiagnose conditions in patients from other ethnic backgrounds. This highlights the importance of dataset diversity and bias mitigation.

- AI-driven treatment recommendations: An AI system that recommends a treatment without sufficient transparency regarding its decision-making process could undermine patient trust and informed consent. The ability to explain the AI’s reasoning is essential for appropriate clinical decision-making.

- AI-assisted drug discovery: AI algorithms used to identify potential drug candidates may overlook certain patient populations, leading to inequitable access to potentially life-saving treatments. This underscores the need for diverse datasets in drug discovery research.

Methods for Ensuring Ethical Use of AI in Healthcare

Robust guidelines and regulations are essential to ensure the ethical use of AI in healthcare. These include establishing clear standards for data privacy, ensuring transparency and explainability in AI systems, and mandating rigorous testing and validation procedures. Moreover, fostering collaboration between AI developers, healthcare professionals, and ethicists is vital for developing responsible AI solutions.

Importance of Patient Consent and Data Privacy

Patient consent is fundamental in AI-driven healthcare. Patients must be fully informed about how their data will be used and have the right to refuse participation. Data privacy regulations, like HIPAA in the US, must be adhered to, protecting sensitive patient information from unauthorized access and misuse. Strong encryption, access controls, and secure storage methods are crucial for safeguarding patient data.

Table Comparing AI Applications in Healthcare and Ethical Implications

| AI Application | Ethical Implications |
|---|---|
| AI-powered diagnostic tools | Potential bias in datasets, lack of transparency, lack of patient control over data |
| AI-driven treatment recommendations | Lack of transparency in decision-making, potential for overreliance on AI, potential for misdiagnosis |
| AI-assisted drug discovery | Potential for overlooking certain patient populations, lack of diversity in datasets, issues with patient data privacy |
| AI-powered robotic surgery | Potential for errors in execution, lack of human oversight, liability issues in case of complications |

AI in Autonomous Systems

Autonomous systems, encompassing vehicles, robots, and drones, are rapidly evolving, prompting crucial ethical considerations. These systems, while promising efficiency and potential for improved safety, present intricate dilemmas that demand careful attention and proactive solutions. The decision-making processes within these systems require careful examination to ensure ethical alignment with human values and societal well-being.

Ethical Dilemmas in Autonomous Systems

Autonomous systems, particularly in transportation, raise profound ethical concerns. A central challenge is anticipating situations in which every available action puts human lives at risk, and deciding in advance how the system should act to minimize overall harm. Related issues include responsibility for accidents, fairness in decision-making algorithms, and the potential for bias in these systems.

Ethical Considerations for Decision-Making in Autonomous Systems

The algorithms governing autonomous systems must be designed with ethical considerations at their core. Transparency in the decision-making processes of these systems is paramount. This means making the rules and criteria used by the AI transparent and understandable to ensure accountability. Furthermore, the systems should be robust against manipulation and bias, aiming for fairness in outcomes. Ultimately, the goal is to ensure that the autonomous system acts in a way that aligns with societal values and expectations.
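To make the idea of transparent rules more concrete, the sketch below gives each decision rule an identifier and a plain-language description, then records which rule produced every decision so that it can be audited later. It is a minimal illustration under stated assumptions: the rule set, the thresholds, and the sensor fields (`obstacle_m`, `visibility_m`) are hypothetical, and many real autonomous systems rely largely on learned models rather than a short list of hand-written rules, so this shows the logging principle rather than how such systems are actually built.

```python
import json
from dataclasses import asdict, dataclass
from typing import Callable


@dataclass(frozen=True)
class Rule:
    rule_id: str
    description: str                  # human-readable criterion for auditors
    applies: Callable[[dict], bool]   # test on the current sensor state
    action: str


# Hypothetical, ordered rule set: the first matching rule decides.
RULES = [
    Rule("R1", "Obstacle within 10 m: brake", lambda s: s["obstacle_m"] < 10, "brake"),
    Rule("R2", "Visibility below 50 m: reduce speed", lambda s: s["visibility_m"] < 50, "slow"),
    Rule("R0", "Default: maintain speed", lambda s: True, "maintain"),
]


@dataclass
class DecisionRecord:
    inputs: dict
    rule_id: str
    description: str
    action: str


def decide(state: dict, audit_log: list) -> str:
    """Return an action and append a record explaining why it was chosen."""
    for rule in RULES:
        if rule.applies(state):
            audit_log.append(
                DecisionRecord(dict(state), rule.rule_id, rule.description, rule.action)
            )
            return rule.action
    raise RuntimeError("no rule matched")  # unreachable while R0 is present


if __name__ == "__main__":
    log: list = []
    decide({"obstacle_m": 8.0, "visibility_m": 200.0}, log)
    decide({"obstacle_m": 40.0, "visibility_m": 30.0}, log)
    # Serialize the log so the decisions can be reviewed after the fact.
    print(json.dumps([asdict(r) for r in log], indent=2))
```

Because each log entry stores the inputs alongside the rule that fired, a reviewer can reconstruct why the system acted as it did, which supports accountability.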

Examples of Potential Ethical Conflicts in Autonomous Systems

Several scenarios illustrate how ethical conflicts can arise in autonomous systems. For instance, a self-driving car facing an unavoidable collision with pedestrians might be programmed to choose the outcome that minimizes harm. The dilemma lies in deciding which outcome actually causes less harm, for example when the only options are hitting a child or hitting an elderly person.

Another example is robotic surgery, where a robot may need to weigh the thoroughness of a procedure against the risk of damaging surrounding healthy tissue. The programming of these systems must account for such nuanced scenarios to promote the well-being of everyone involved.

Ethical considerations are especially pressing given the vast datasets that AI systems process. Data analytics tools are essential for building AI models, but how these tools are used, and what data they analyze, must be scrutinized carefully to ensure responsible AI development.

Methods for Developing Ethical Guidelines for Autonomous Systems

Establishing ethical guidelines for autonomous systems requires a multi-faceted approach. First, robust frameworks need to be developed that consider the specific context of the system and its intended use. This necessitates the participation of ethicists, engineers, legal experts, and members of the public. A key aspect is developing a standardized approach for evaluating and auditing the ethical implications of AI systems.

Further, incorporating principles of fairness, transparency, and accountability into the design and implementation of these systems is critical.

Table: Potential Ethical Issues and Solutions in Autonomous Systems

| Ethical Issue | Potential Solution |
|---|---|
| Unforeseen Circumstances: Autonomous systems may encounter situations not accounted for in their programming. | Robust Testing and Simulation: Rigorous testing across many scenarios, including unexpected events, to identify and mitigate potential issues. |
| Bias in Algorithms: Algorithms may exhibit biases that lead to unfair or discriminatory outcomes. | Diverse Datasets: Training algorithms on datasets that represent diverse populations, which helps improve fairness and inclusivity. |
| Lack of Transparency: The decision-making processes of autonomous systems may be opaque. | Explainable AI (XAI): Developing techniques that make the decision-making processes of autonomous systems more understandable. |
| Accountability in Accidents: It can be difficult to determine who is responsible for accidents involving autonomous systems. | Clear Legal Frameworks: Establishing legal frameworks that address liability and responsibility in accidents involving autonomous systems. |
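The "diverse datasets" remedy can be made measurable by comparing how often each group appears in the training data with its share of a reference population. The sketch below assumes hypothetical group labels and reference shares, and a tolerance of 0.5 (a group is flagged when its share in the data is less than half of its reference share); that threshold is an illustrative choice, not an established standard.

```python
from collections import Counter


def representation_report(groups: list[str], reference: dict[str, float],
                          tolerance: float = 0.5) -> dict[str, dict]:
    """Compare each group's share of a dataset with a reference share.

    A group is flagged as under-represented when
    observed_share < tolerance * reference_share.
    """
    counts = Counter(groups)
    total = len(groups)
    report = {}
    for group, ref_share in reference.items():
        observed = counts.get(group, 0) / total if total else 0.0
        report[group] = {
            "observed_share": round(observed, 3),
            "reference_share": ref_share,
            "under_represented": observed < tolerance * ref_share,
        }
    return report


if __name__ == "__main__":
    # Hypothetical age-band labels for pedestrians in a perception dataset.
    labels = ["adult"] * 90 + ["child"] * 3 + ["older_adult"] * 7
    reference = {"adult": 0.70, "child": 0.15, "older_adult": 0.15}
    for group, row in representation_report(labels, reference).items():
        print(group, row)
```

With these made-up numbers, children (3% of the data against a 15% reference) and older adults (7% against 15%) would both be flagged, which is exactly the kind of gap that could skew a system's behavior in the pedestrian scenarios discussed earlier.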

International Cooperation on AI Ethics

Global collaboration on AI ethics is crucial given the rapid advancement and widespread deployment of AI technologies. Because cultural contexts, legal systems, and societal values differ across countries, international frameworks are needed to ensure responsible AI development and deployment. Addressing potential risks and maximizing benefits requires a coordinated global effort, and international cooperation is essential for establishing consistent standards and regulations.

This coordinated approach helps prevent the development and deployment of AI systems that could exacerbate existing inequalities or pose unforeseen risks. Shared guidelines and principles promote ethical AI practices across borders, fostering trust and responsible innovation.

Need for International Collaboration

Establishing common ground on AI ethics is vital for mitigating potential risks. Harmonized standards help ensure that AI systems are developed and deployed responsibly across jurisdictions, which builds trust in these systems and reduces conflicts and misunderstandings arising from divergent ethical frameworks.

Challenges in Achieving Global Consensus

Significant challenges exist in achieving global consensus on AI ethics. Different nations have varying levels of technological development, economic disparities, and diverse cultural norms, all of which influence ethical considerations. Disagreements on specific ethical principles, interpretations of risks, and the appropriate regulatory mechanisms further complicate the process.

Strategies for Promoting Ethical AI Practices Across Borders

International organizations, such as the United Nations, can play a crucial role in fostering cooperation on AI ethics. Promoting dialogue and knowledge-sharing between nations through workshops, conferences, and other platforms is essential. Developing standardized benchmarks for ethical AI systems can facilitate comparison and adaptation across various contexts. Furthermore, establishing international cooperation on data sharing practices is critical for responsible AI development.

Examples of International Initiatives Addressing AI Ethics

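Several international bodies are actively working on AI ethics. The OECD adopted its AI Principles in 2019, setting out recommendations for trustworthy AI. In the European Union, the GDPR indirectly shapes AI practice through its data-protection rules, while the EU AI Act regulates AI systems directly according to the level of risk they pose. The UN, through agencies such as UNESCO, which adopted a Recommendation on the Ethics of Artificial Intelligence in 2021, is also working to address ethical concerns about AI.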

Summary of International Agreements and Guidelines on AI Ethics

| Organization | Agreement/Guidelines | Key Focus |
|---|---|---|
| OECD | AI Principles | Promoting fairness, accountability, transparency, and human oversight in AI systems. |
| European Union | GDPR, AI Act | Protecting user privacy and promoting ethical considerations in AI development, particularly in sensitive areas like healthcare and finance. |
| United Nations | Various resolutions and reports | Addressing AI’s global impact, including potential risks to human rights, and fostering international cooperation. |

Future Directions in AI Ethics

The rapid advancement of artificial intelligence necessitates a proactive and anticipatory approach to ethical considerations. Future directions in AI ethics require a holistic understanding of the potential societal impacts and the development of robust frameworks to navigate the complex challenges that lie ahead. This involves anticipating emerging ethical dilemmas, exploring innovative solutions, and fostering ongoing dialogue between researchers, policymakers, and the public.

Emerging Trends and Challenges

The evolving landscape of AI presents a multitude of emerging trends and challenges. These include the increasing complexity of AI systems, the potential for biased algorithms to perpetuate societal inequalities, the lack of transparency in decision-making processes, and the difficulty in establishing accountability for AI-driven actions. Furthermore, the integration of AI into critical sectors like healthcare and autonomous vehicles raises significant ethical concerns about safety, responsibility, and the potential displacement of human workers.

These concerns demand immediate attention and careful consideration to ensure that AI benefits society as a whole.

Potential Future Directions for Research and Development

Future research and development in AI ethics should focus on developing more robust and adaptable ethical frameworks. This includes the creation of standardized benchmarks for evaluating the ethical implications of AI systems, the development of methods for detecting and mitigating bias in algorithms, and the implementation of techniques to enhance the transparency and explainability of AI decision-making processes. Furthermore, exploring the use of AI to detect and prevent ethical violations in other AI systems is crucial.
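As a concrete instance of bias detection, two widely used group-fairness measures compare the rate of favorable outcomes (for example, loan approvals) across groups: the demographic parity difference (highest selection rate minus lowest) and the disparate impact ratio (lowest divided by highest). A ratio below 0.8 echoes the "four-fifths rule" used as a rule of thumb in US employment-selection guidelines. The sketch below assumes binary predictions and a single group attribute, and the data are made up; neither metric alone establishes that a system is fair.

```python
from collections import defaultdict


def selection_rates(predictions: list[int], groups: list[str]) -> dict[str, float]:
    """Fraction of positive (1) predictions within each group."""
    if len(predictions) != len(groups):
        raise ValueError("predictions and groups must have the same length")
    positives: dict[str, int] = defaultdict(int)
    totals: dict[str, int] = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += pred
    return {g: positives[g] / totals[g] for g in totals}


def fairness_summary(predictions: list[int], groups: list[str]) -> dict[str, float]:
    """Demographic parity difference and disparate impact ratio."""
    rates = selection_rates(predictions, groups)
    highest, lowest = max(rates.values()), min(rates.values())
    return {
        "demographic_parity_difference": highest - lowest,
        # Guard against division by zero when no group receives positives.
        "disparate_impact_ratio": lowest / highest if highest > 0 else 1.0,
    }


if __name__ == "__main__":
    # Hypothetical loan decisions (1 = approved) for two applicant groups.
    preds = [1, 1, 1, 0, 1, 1, 0, 0, 1, 0]
    groups = ["A"] * 5 + ["B"] * 5
    summary = fairness_summary(preds, groups)
    print(summary)
    if summary["disparate_impact_ratio"] < 0.8:
        print("Warning: ratio below the four-fifths rule of thumb")
```

Here group A is approved 80% of the time and group B 40%, giving a ratio of 0.5, well under the 0.8 rule of thumb.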

Methods for Anticipating and Mitigating Future Ethical Concerns

Proactive strategies for anticipating and mitigating future ethical concerns in AI involve fostering a culture of ethical awareness within the AI research community. This includes establishing clear ethical guidelines and best practices for AI development, implementing robust mechanisms for ethical review of AI systems, and promoting interdisciplinary collaboration between AI researchers, ethicists, policymakers, and the public. Open dialogue and transparent communication about potential ethical dilemmas are paramount.

Moreover, incorporating ethical considerations into the design and development process from the outset can significantly reduce the risk of unforeseen ethical issues.

Innovative Approaches to Address Emerging Ethical Issues

Innovative approaches to emerging ethical issues in AI include designing AI systems explicitly to promote fairness and equity. Game theory and other decision-making frameworks are also worth exploring as ways to create AI systems that align with societal values and goals. Explainable AI (XAI) techniques can increase transparency and build trust in AI systems, which is critical for public acceptance and responsible development.
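For the simplest class of models, the idea behind XAI can be shown directly: in a linear or logistic scoring model, each feature's contribution to a prediction is its weight multiplied by its value, so the score decomposes exactly into per-feature terms that can be ranked and shown to a user. The sketch below assumes a hypothetical logistic risk model with invented feature names and weights. Complex models such as deep networks need approximate attribution methods (for example SHAP or LIME), which this example does not implement.

```python
import math


def explain_linear(weights: dict[str, float], bias: float,
                   features: dict[str, float]) -> tuple[float, list[tuple[str, float]]]:
    """Return a logistic score and per-feature contributions (weight * value).

    The contributions plus the bias sum exactly to the model's logit, so the
    explanation is faithful for this model class.
    """
    contributions = {name: weights[name] * features[name] for name in weights}
    logit = bias + sum(contributions.values())
    probability = 1.0 / (1.0 + math.exp(-logit))
    # Rank by absolute effect so the most influential features come first.
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return probability, ranked


if __name__ == "__main__":
    # Hypothetical weights and one standardized patient feature vector.
    weights = {"age": 0.4, "blood_pressure": 0.9, "bmi": 0.3}
    patient = {"age": 1.2, "blood_pressure": -0.5, "bmi": 2.0}
    prob, ranked = explain_linear(weights, bias=-1.0, features=patient)
    print(f"predicted risk: {prob:.2f}")
    for name, value in ranked:
        print(f"  {name}: {value:+.2f}")
```

A clinician reading this output would see which inputs pushed the score up or down, which is the kind of reasoning trail XAI aims to provide for more complex models.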

Forecasting Future Ethical Dilemmas and Potential Solutions

| Future Ethical Dilemma | Potential Solution |
|---|---|
| Bias in AI loan applications leading to discriminatory outcomes. | Developing algorithms that explicitly consider factors other than credit history in loan applications, while maintaining fairness and equity. |
| Autonomous vehicles causing harm in unforeseen circumstances. | Developing safety protocols and backup systems for autonomous vehicles to mitigate risks, while continuously evaluating and improving the algorithms. |
| Lack of transparency in AI-driven medical diagnoses. | Developing XAI methods to explain the reasoning behind AI-driven diagnoses, enabling clinicians to understand the decision-making process. |
| AI-driven job displacement leading to social unrest. | Investing in retraining and upskilling programs to prepare workers for the changing job market and provide support for those impacted by AI. |

Conclusion

In conclusion, navigating the ethical landscape of artificial intelligence demands a multifaceted approach. By understanding the diverse ethical frameworks, acknowledging potential biases, and prioritizing transparency and accountability, we can strive towards developing AI systems that benefit humanity. This discussion highlights the need for ongoing dialogue, international collaboration, and continuous evaluation of AI’s impact to ensure its ethical and responsible use in the future.

FAQ Insights

What are some common ethical concerns surrounding AI in healthcare?

AI in healthcare raises concerns about data privacy, patient consent, and potential algorithmic bias in diagnosis and treatment recommendations. The need for robust ethical guidelines and regulations is crucial to ensure responsible and equitable application.

How can we ensure fairness in AI systems?

Addressing fairness in AI requires careful attention to potential biases in training data and algorithms. Techniques such as data augmentation, the use of diverse datasets, and ongoing monitoring can help mitigate bias and promote fairer outcomes.
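A simple rebalancing step related to data augmentation is random oversampling, which duplicates examples from under-represented groups until every group is as large as the biggest one. True augmentation generates new, varied examples instead of copies, so this is a cruder stand-in. The sketch assumes training rows are dictionaries with a hypothetical group field; duplicating rows adds no new information and can encourage overfitting, so it should be paired with the ongoing monitoring mentioned above.

```python
import random
from collections import Counter, defaultdict


def oversample_to_balance(rows: list[dict], group_key: str, seed: int = 0) -> list[dict]:
    """Duplicate randomly chosen rows so every group matches the largest group."""
    if not rows:
        return []
    rng = random.Random(seed)  # fixed seed for reproducible resampling
    by_group: dict[str, list[dict]] = defaultdict(list)
    for row in rows:
        by_group[row[group_key]].append(row)
    target = max(len(members) for members in by_group.values())
    balanced: list[dict] = []
    for members in by_group.values():
        balanced.extend(members)
        # Sample with replacement to fill the gap up to the largest group.
        balanced.extend(rng.choices(members, k=target - len(members)))
    rng.shuffle(balanced)
    return balanced


if __name__ == "__main__":
    # Hypothetical training rows with a demographic attribute.
    data = [{"group": "A", "x": i} for i in range(8)]
    data += [{"group": "B", "x": i} for i in range(2)]
    balanced = oversample_to_balance(data, "group")
    print(Counter(r["group"] for r in balanced))
```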

What role do international organizations play in AI ethics?

International collaboration is vital for establishing common standards and regulations for AI ethics. Organizations like the OECD and the EU are working to foster global consensus on ethical AI practices.

What are the potential societal impacts of AI job displacement?

AI-driven automation may lead to job displacement in certain sectors. This necessitates proactive measures for workforce retraining, upskilling, and economic diversification to address the potential social and economic ramifications.